Design Patterns for LLM Systems & Products

Hey friends,

It's been a while since my last email, and that's because today's post (Design Patterns for LLM Systems & Products) took waaay longer than I expected. What I had imagined to be a 3,000-word write-up grew to 12,000+ words: as I researched these patterns, there was just more and more to dig into and write about. Because today's piece is so long, I've only included the introduction section, with a link to the full post. Enjoy!

• • •

This post is about practical patterns for integrating large language models (LLMs) into systems and products. We’ll draw from academic research, industry resources, and practitioner know-how, and try to distill them into key ideas and practices.

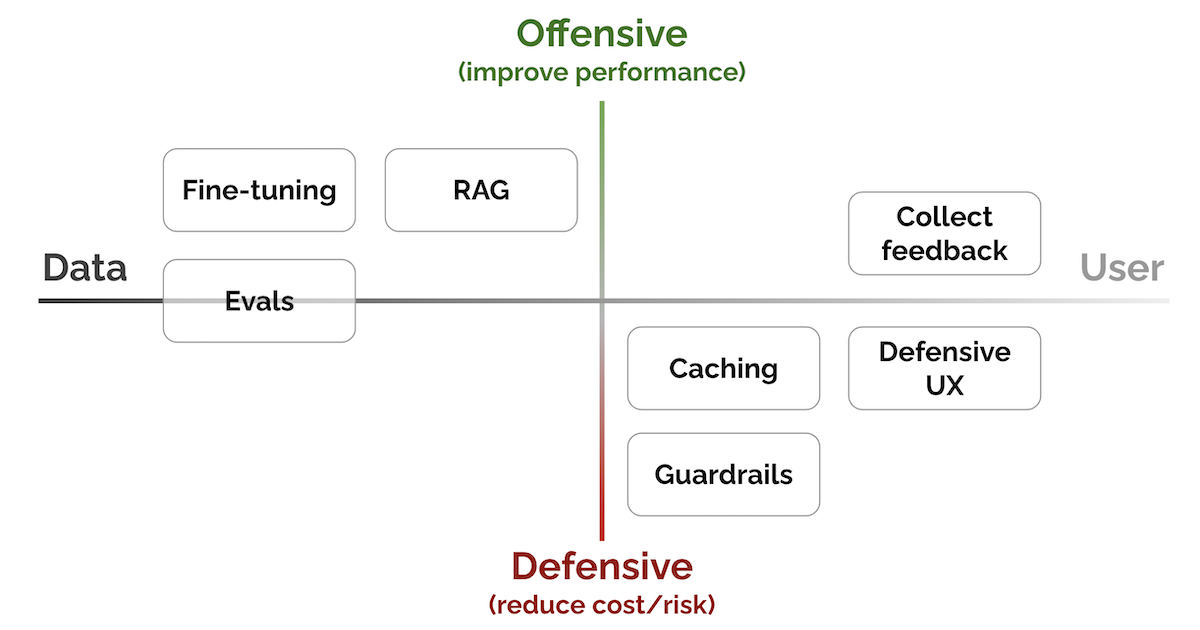

There are seven key patterns. I’ve also organized them along the spectrum of improving performance vs. reducing cost/risk, and closer to the data vs. closer to the user.

- Evals: To measure performance

- RAG: To add recent, external knowledge

- Fine-tuning: To get better at specific tasks

- Caching: To reduce latency & cost

- Guardrails: To ensure output quality

- Defensive UX: To anticipate & manage errors gracefully

- Collect user feedback: To build our data flywheel

LLM patterns: From data to user, from defensive to offensive

Eugene Yan

I build ML, RecSys, and LLM systems that serve customers at scale, and write about what I learn along the way. Join 7,500+ subscribers!
