Hey friends, I've been thinking and experimenting a lot with how to apply, evaluate, and operate LLM-evaluators and have gone down the rabbit hole on papers and results. Here's a writeup on what I've learned, as well as my intuition on it. It's a very long piece (49 min read) and so I'm only sending you the intro section. It'll be easier to read the full thing on my site. I appreciate you receiving this, but if you want to stop, simply unsubscribe. 👉 Read in browser for best experience (web...

about 2 months ago • 4 min read

Hey friends, Just got back from the AI Engineer World's Fair and it was a blast! I had the opportunity to give the closing keynote, as well as host GitHub CEO Thomas Dohmke for a fireside chat. Along the same lines, I've been thinking about how to interview for ML/AI engineers and scientists, and got together with Jason to write about the technical and non-technical skills to look for, how to phone screen, run interview loops, and debrief, and some tips for interviewers and hiring managers....

3 months ago • 17 min read

Hey friends, Recently a couple of friends and I got together to write about some challenges and hard-won lessons from a year of building with LLMs. One thing led to another and this is now published on O'Reilly in three sections: Tactics (prompting, RAG, workflows, caching, when to finetune, evals, guardrails); Ops (looking at data, working with models, product and risk, building a team); and Strategy ("No GPUs before PMF", "the system not the model", how to iterate, cost). We have a dedicated site...

4 months ago • 1 min read

Hey friends, I've been helping teams with their prompts lately and was sad to see that they didn't have a good understanding of the basics, even as they reached for advanced techniques and complicated prompting tools. This spurred me to write this piece on the fundamentals of prompting. By mastering these, we should get 80-90% of the way to the optimal prompt. Aside: My friend Hamel Husain is organizing an LLM Conference + Finetuning Workshop: 11 talks by world-class practitioners like...

5 months ago • 9 min read

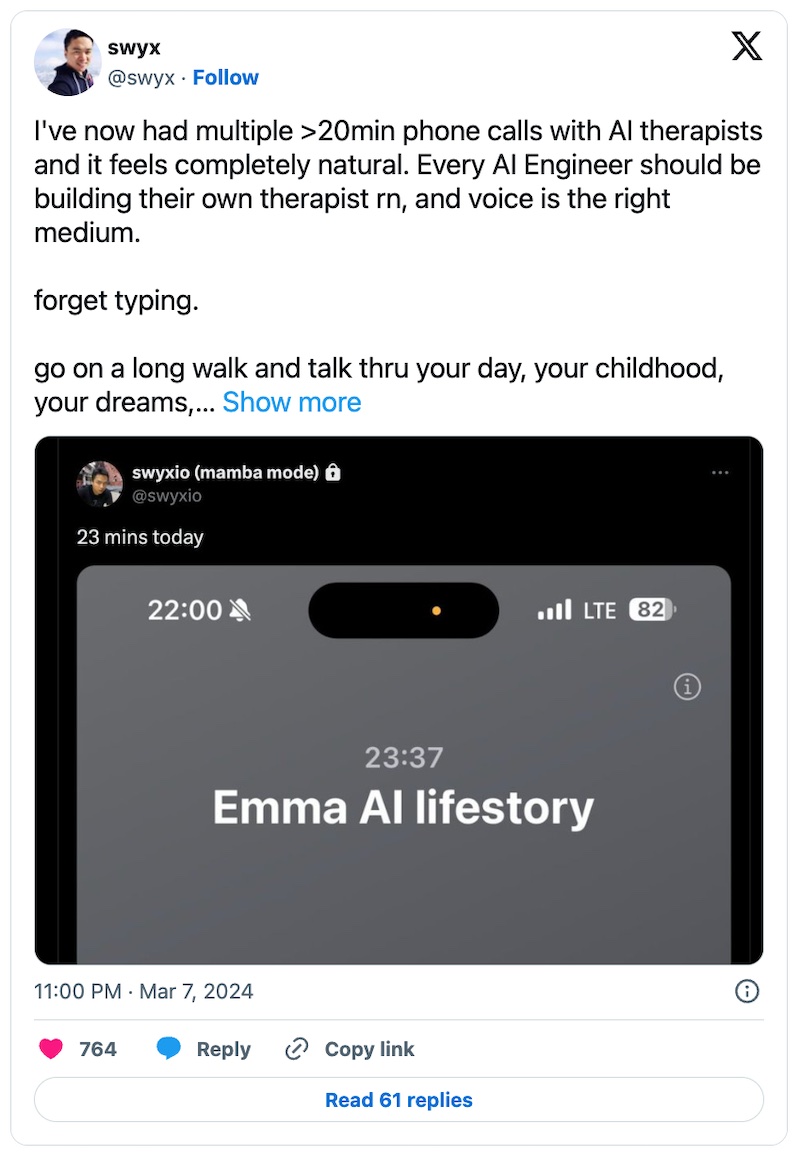

Hey friends, This week, I share how I built Tara, a simple AI coach that I can talk to. I was initially skeptical of voice as a modality, as Siri and other voice assistants didn't work so well for me. But after building Tara, I'm fully convinced. The post includes a phone line to Tara. Enjoy! I suffer from monkey mind, chronic imposter...

6 months ago • 3 min read

Hey friends, I've been thinking a lot about evals lately, and trying dozens of them to understand which correlate best with actual use cases. In this write-up, I share an opinionated take on what doesn't really work and what does, focusing on classification, summarization, translation, copyright regurgitation, and toxicity. I hope this saves you time figuring out your evals!

7 months ago • 21 min read

Hey friends, This week we discuss how to overcome the bottleneck of human data and annotations: synthetic data. We'll see how we can apply distillation and self-improvement across the three stages of model training (pretraining, instruction-tuning, preference-tuning). Enjoy! It is increasingly viable to use synthetic data for pretraining,...

8 months ago • 24 min read

Hey friends, Here's a short post on my 2023 in review. I'll go through the goals I set in 2022, some highlights for the year (e.g., diving into language modeling), goals for 2024, and some stats from 2023. If you have a review of your own, please reply to this with it; I'd love to read it. 2023 was a peaceful year of small, steady steps. There...

10 months ago • 6 min read

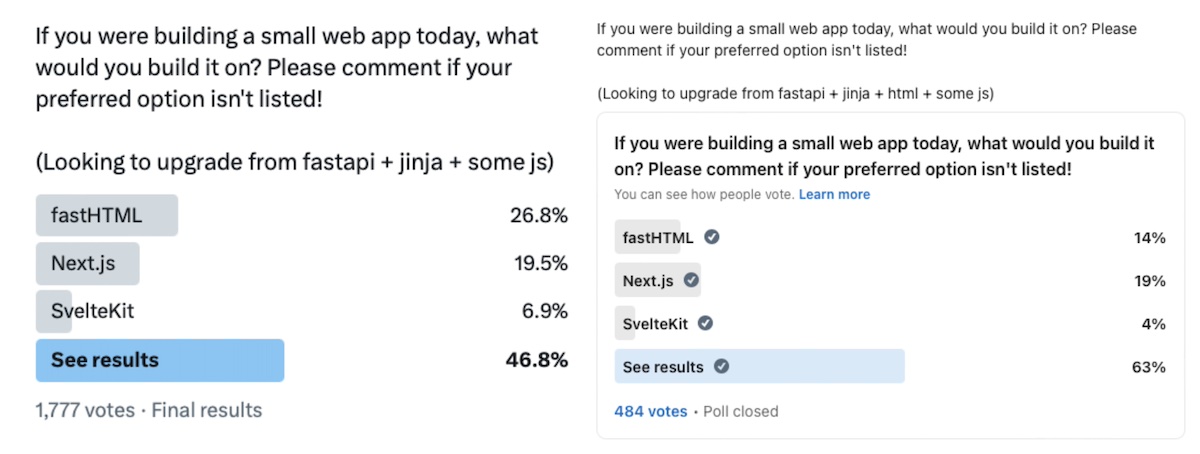

Hey friends, I've been digging into various push notification systems from companies like Duolingo, Twitter, Pinterest, and LinkedIn and it's been fascinating. On the surface, push seems similar to regular recsys. But as we dig deeper, we see that the user experience and implementation differ a fair bit. In this piece, we'll discuss how push differs from regular recsys, how we choose what items to push or not push, and how often to push. Hope you're having a great vacation and a good...

10 months ago • 13 min read